unsafe code is not only tricky to get right. It’s also tricky to track down when things go wrong.

Here’s a question to ponder: do you think you are experienced enough at Rust to use unsafe code properly? If you asked me 24 hours ago, I would confidently say “yes, I am.” I would describe my Rust experience level as at least intermediate. On top of that, I have a little bit of an ego.

In fact, I fully support using unsafe in libraries! Many times, there’s no other way to do something!

- You’re communicating with a system API or C library. Although, for the former, I would recommend seeing if using

rustixor another system interface is an option. - You’re doing something that the borrow checker or type system can’t fully check your work on, even with workarounds like

RefCellorAny. - There’s just some performance boost that’s simply not possible without an

unsafeworkaround. I would strongly recommend benchmarking this claim before implementing it in a publicly used crate.

While I’m not against unsafe, I am for really checking and validating that unsafe. You need to be making sure it’s doing what it needs to be doing, and that it’s doing that thing right. The hazard with unsafe isn’t just that it can break your crate. It’s that it can break other crates, in a way that’s very, very difficult to trace back to your original crate.

You know how I said I had a chip on my shoulder? In the past week, I’ve had two soundness errors reported. For the same crate, no less!

One of those soundness errors is a fairly routine linked list rigamarole with an associated discussions about public API. The other one is a frantic chase through several different crates, where things are never as they seem. This blog post focuses on the second one, as I find it more entertaining and maybe we can even shoehorn a moral in there somewhere.

So let’s ask ourselves: what do we open ourselves up to when we add unchecked unsafe to our code.

I mention crates that are created by and maintained by other people in this post. They have done nothing wrong: all of the bugs were introduced by me, notgull. It should go without saying, but please do not seek out or harass these other people.

Event Listener Escapade

Let’s introduce our cast of characters.

event-listener is a fairly primitive crate in the smol ecosystem. It’s main responsibility is communications between tasks; it essentially handles the “if one thing happens in a task, wake up another task” use case. It’s used to implement locks and channels, among other things.

event-listener also has a lot of unsafe code. In my opinion, this is a good thing: it means that other crates that depend on it don’t need to have that unsafe code for themselves. I’ll also be the first to admit that a lot of the unsafe code is unnecessary. Some of it exists because we’ve benchmarked it to be faster, and some of it exists because there isn’t really a better way to implement, say, a limited-contention spinlock in Safe Rust.

A few days ago, one of our maintainers reported a bug. Most of the time, soundness bugs have a specific flavor, like bitter licorice. This bug didn’t have that flavor, at first glance. It was like biting into the sweet apple of a simple off-by-one error, with an aftertaste of smooth, smooth WebAssembly. One fateful day, GitHub Actions started failing with a strange error.

$ wasm-pack test --node --no-default-features --features portable-atomic

Running tests/notify.rs (target/wasm32-unknown-unknown/debug/deps/notify-0ef5209d8abd9615.wasm)

Set timeout to 20 seconds...

Executing bindgen...

running 9 tests

panicked at /home/runner/work/event-listener/event-listener/src/no_std.rs:639:48:

index out of bounds: the len is 0 but the index is 4

Here is the full log, in case you want to try to take a crack at this bug for yourself.

Debugging Demonology

At first glance, I ran this code on my laptop and wasn’t able to replicate the issue.

$ wasm-pack test --node --no-default-features --features portable-atomic

<snip: tests pass>

I took one glance at the WASM bits and the portable-atomic feature and decided that it must be some kind of strange one-in-one-thousand race condition. It was a Thursday and I had work to do, so I decided to revisit it on the weekend. The weekend came and, after re-running the Actions workflow, the bugs were still there.

Weird, I thought to myself. If it was a race condition, it shouldn’t be happening consistently. So I needed to take a closer look at what was happening here.

While reading through the logs, I realized that the list that the out-of-bounds error was happening on was initialized like this:

/// Create a new, empty list.

pub(crate) fn new() -> Self {

Self {

listeners: alloc::vec![Entry::Sentinel],

<snip: irrelevant fields>

}

}

This list never has elements removed from it. It works a lot like the slab crate does; it leaves those slots open for future entries to fill. Remember that “out of bounds” error?

index out of bounds: the len is 0 but the index is 4

“len is 0”? The length of this list can only ever be one or more. So unless I’m accidentally calling remove() or truncate() on that list at some point, the length should never be zero.

On top of that, I noticed this little detail in the logs as well, for another failed test:

called `Result::unwrap()` on an `Err` value: Full(Notify { count: 1, is_additional: true })

This operation results from a concurrent queue push operation, where the queue is initialized like this:

pub(super) fn new() -> List<T> {

List {

queue: concurrent_queue::ConcurrentQueue::unbounded(),

<snip: irrelevant fields>

}

}

Unbounded queues can never be full. It doesn’t happen; they just allocate more memory to make room. At this point I curled my nose as the bitter aroma of memory corruption started to waft my way.

“Maybe it’s a rustc bug” I say to myself, still in the “denial” phase. I had to keep digging deeper; hopefully I can find the actual cause of the error and set up a minimal reproduction of it.

Damaged Dependencies

At this point, it occurred to me that one of the differences between my machine and the Actions virtual machine is that I have a Cargo.lock with some older dependencies in it. In most of my projects I don’t commit Cargo.lock to Git, even though it’s the default now.

So I back up my current Cargo.lock as a “last good state” (foreshadowing for later) and try the tests again.

$ wasm-pack test --node --no-default-features --features portable-atomic

<snip: tests succeed>

$ cargo update

Updating crates.io index

Updating concurrent-queue v2.3.0 -> v2.4.0

Updating crossbeam-utils v0.8.16 -> v0.8.17

Updating futures-lite v2.0.1 -> v2.1.0

Updating itoa v1.0.9 -> v1.0.10

Updating js-sys v0.3.65 -> v0.3.66

Updating libc v0.2.150 -> v0.2.151

Updating once_cell v1.18.0 -> v1.19.0

Updating portable-atomic v1.5.1 -> v1.6.0

Updating proc-macro2 v1.0.69 -> v1.0.70

Updating ryu v1.0.15 -> v1.0.16

Updating serde v1.0.192 -> v1.0.193

Updating serde_derive v1.0.192 -> v1.0.193

Updating syn v2.0.39 -> v2.0.41

Updating wasm-bindgen v0.2.88 -> v0.2.89

Updating wasm-bindgen-backend v0.2.88 -> v0.2.89

Updating wasm-bindgen-futures v0.4.38 -> v0.4.39

Updating wasm-bindgen-macro v0.2.88 -> v0.2.89

Updating wasm-bindgen-macro-support v0.2.88 -> v0.2.89

Updating wasm-bindgen-shared v0.2.88 -> v0.2.89

Updating wasm-bindgen-test v0.3.38 -> v0.3.39

Updating wasm-bindgen-test-macro v0.3.38 -> v0.3.39

Updating web-sys v0.3.65 -> v0.3.66

$ wasm-pack test --node --no-default-features --features portable-atomic

<snip: tests fail again>

“Aha! It’s one of the dependencies!” I shout aloud to myself, fully aware no one is listening. “It’s not my fault!”

Put yourself in my shoes for a second. It’s probably portable-atomic, right? I mean, the error only happens when portable-atomic support is enabled. It’s a crate that adds a polyfill for the core::sync::atomic module that works on embedded platforms without atomics. It’s very thorough and has support for many different architectures and platforms. Since it has a lot of unsafe, that makes it very easy to blame.

So, let’s update it and see what happens.

$ cp Cargo.lock.old Cargo.lock

$ cargo update portable-atomic

Updating crates.io index

Updating portable-atomic v1.5.1 to v1.6.0

$ wasm-pack test --node --no-default-features --features portable-atomic

<snip: tests succeed>

The tests passed… I’m sorry, what?

So it’s a dependency update… but not in portable-atomic? Weird, I guess I can run through the other dependencies we updated and see which one is the squeaky wheel. Let’s start with concurrent-queue, it seems innocent enough.

$ cp Cargo.lock.old Cargo.lock

$ cargo update concurrent-queue

Updating crates.io index

Updating concurrent-queue v2.3.0 -> v2.4.0

$ wasm-pack test --node --no-default-features --features portable-atomic

<snip: tests fail>

At this point, I did a double take at my monitor. What?

concurrent-queue is a smol crate that provides a queue that can be accessed concurrently. It’s significantly more efficient than having a Mutex around a non-synchronous queue, especially when multiple threads are pushing to or reading from the queue. It also has a lot of unsafe code which, under normal circumstances, I would be happy to blame.

But, I was the one to push that update from v2.3.0 to v2.4.0. The only real change was getting rid of a heap allocation. This didn’t add or remove any unsafe code; it just moved some fields onto the stack instead of the heap… actually, it was still in the heap, since in event-listener the queue was wrapped with an Arc, which allocates on the heap.

Still, if you’re looking for someone to blame, concurrent-queue seemed like it was caught red-handed. The build broke when I updated it, that was the smoking gun!

Goose Chase Gallery

My attention was piqued when I noticed that I’d forgotten to add WebAssembly tests for concurrent-queue. If this was a concurrent-queue issue, it would be hidden by these lack of tests. My suspicions were confirmed when I ran tests using wasm-pack and saw that, yes, they were failing with portable-atomic being enabled.

I began to settle in for the grueling task of reviewing atomics to make sure that all of the operations were correct. Especially for a crate as intricate as concurrent-queue, this could take weeks. Except… let me check those tests again.

Oh wait, the only tests that are failing are the ones that try to spawn threads. Of course they fail, you can’t spawn threads on WASM! Let’s mask those tests out, and..

Wait, the tests work? Hey, how’s that possible?

Maybe… maybe the leftover tests aren’t good enough. Yes, maybe the bug I’m looking for isn’t covered by these tests, so I need to write more tests. So I need to finally figure out how web workers work. Then I’ll need to rewrite my tests, nay, my test harness, to properly run all of these test cases?

Alternatively, maybe we have the wrong guy.

miri Magic

Have you ever watched a police procedural? Usually, they play a trick on you in the middle of the episode. A crime happens, and after some detective work they find a guy who looks like they did it. They have evidence! The smoking gun! But our protagonist, a rookie cop who’s flexible about his position on torturing civilians, doesn’t think they did it. But their completely reasonable qualms that might come up during trial are ignored by the rest of the police force. Then the protagonist confronts the actual perpetrator, who’s usually some poorly developed background character, and brings him to justice.

In this metaphor I’m the police force, and I thought I’d caught concurrent-queue red-handed. But something didn’t sit right.

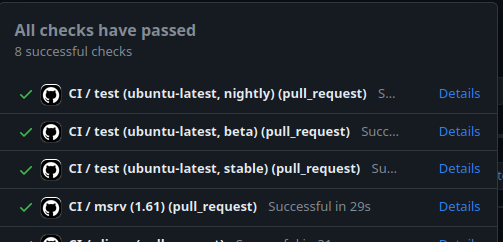

Before I got too deep into concurrent-queue’s innards, I realized something. In my CI, I wasn’t running miri tests for the case where portable-atomic was enabled. This meant that, if this was a memory corruption error, it wasn’t being caught by miri.

This introduced a thought into my head for the first time, a thought I should’ve had three days ago. Maybe it isn’t strictly a WASM issue?

So I ran miri with portable-atomic support, and sure enough:

$ cargo miri test --no-default-features --features portable-atomic --tests

<snip: miri noise>

test drop_non_notified ... error: Undefined Behavior: constructing invalid value: encountered an unaligned reference (required 128 byte alignment but found 16)

--> /home/jtnunley/.rustup/toolchains/nightly-x86_64-unknown-linux-musl/lib/rustlib/src/rust/library/core/src/ptr/mut_ptr.rs:367:57

|

367 | if self.is_null() { None } else { unsafe { Some(&*self) } }

| ^^^^^^ constructing invalid value: encountered an unaligned reference (required 128 byte alignment but found 16)

|

= help: this indicates a bug in the program: it performed an invalid operation, and caused Undefined Behavior

= help: see https://doc.rust-lang.org/nightly/reference/behavior-considered-undefined.html for further information

= note: BACKTRACE:

= note: inside `std::ptr::mut_ptr::<impl *mut event_listener::Inner<()>>::as_ref::<'_>` at /home/jtnunley/.rustup/toolchains/nightly-x86_64-unknown-linux-musl/lib/rustlib/src/rust/library/core/src/ptr/mut_ptr.rs:367:57: 367:63

note: inside `event_listener::Event::try_inner`

--> /home/jtnunley/Projects/smol-rs/event-listener/src/lib.rs:443:18

|

443 | unsafe { inner.as_ref() }

| ^^^^^^^^^^^^^^

Specifically, I noticed this error:

required 128 byte alignment but found 16

What this is telling me is that we are trying to dereference a pointer that isn’t properly aligned. So, where is this pointer coming from?

/// Return a reference to the inner state if it has been initialized.

#[inline]

fn try_inner(&self) -> Option<&Inner<T>> {

let inner = self.inner.load(Ordering::Acquire);

unsafe { inner.as_ref() }

}

Okay, this is just the pointer we’re storing in the self.inner atomic variable. How are we calculating that pointer?

// Allocate the state on the heap.

let new = Arc::new(Inner::<T>::new());

// Convert the state to a raw pointer.

let new = Arc::into_raw(new) as *mut Inner<T>;

// Replace the null pointer with the new state pointer.

inner = self

.inner

.compare_exchange(inner, new, Ordering::AcqRel, Ordering::Acquire)

.unwrap_or_else(|x| x);

So, inner is just the return value of an Arc allocation? Weird. The standard library’s types are usually pretty well-tested. Not to mention, I’m sure that other programs make use of dereferencing the into_raw too, and they would have seen this error before me right now. Let me check my imports…

Oh no.

#[cfg(feature = "portable-atomic")]

use portable_atomic_util::Arc;

Oh no.

Arc Apocalypse

portable-atomic-util is a sort of “side crate” to portable-atomic. It’s where you put things that are too specific to be put in portable-atomic, but are probably still useful.

One of the tools in portable-atomic-util is an implementation of Arc. It’s similar to the one in the standard library, but instead of using atomics from the standard library it uses portable-atomic. Unfortunately it’s not a 1:1 copy of the one from the standard library, as the standard library makes use of unstable features that can’t be used from normal library crates.

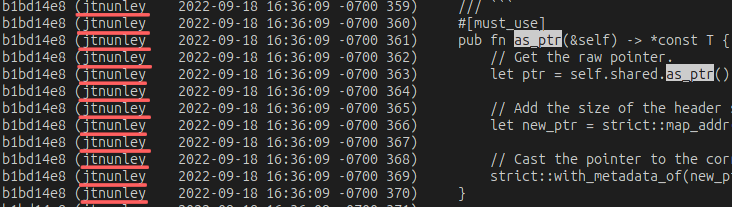

So, let’s take a look at its into_raw implementation. It looks a little like this:

// The inner heap allocation of an `Arc`.

#[repr(C)]

struct Shared<T: ?Sized> {

/// The reference count of the `Arc`.

header: Header,

/// The value that is being reference counted.

value: T,

}

impl<T> Arc<T> {

#[must_use]

pub fn as_ptr(&self) -> *const T {

// Get the raw pointer.

let ptr = self.shared.as_ptr() as *mut u8;

// Add the size of the header so that it points to the value.

let new_ptr = strict::map_addr(ptr, |addr| addr + mem::size_of::<Header>());

// Cast the pointer to the correct type.

strict::with_metadata_of(new_ptr, self.shared.as_ptr() as *mut T)

}

}

It’s pretty simple. Our shared pointer points to an instance of the Shared structure on the heap, and we want it to point to the value field. That way, when the user dereferences the pointer, they get the underlying T.

Unfortunately we can’t use addr_of_mut like the standard library yet. It’s stable, but portable-atomic-util’s MSRV is lower than the version where it was introduced. So we do the math manually. The value will be after the Header, so just add the size of the Header to get the pointer. Right?

Wrong.

What the author of this code failed to consider is alignment. A lot of structures need to have their address be a multiple of a certain number, usually the size of their largest field. The reasons for this are related to the underlying CPU architectures and I can’t really get into that. This article is looking pretty long as it is.

However, the ConcurrentQueue type has an alignment of 128. This is related to how cache lines are implemented on the x86 architecture. When you have frequently changed atomic data, you want to put in a separate cache line than other data. That way, the processor won’t need to reload that other data too frequently. No, we really don’t have time.

So, it is possible for Shared above to be laid out with the Header at the start, then have some number of padding bytes before the actual value field. This means that the pointer addition from above is now pointing into padding, rather than the actual T value. That explains the memory corruption.

It also explains why it’s only started to cause undefined behavior now. In v2.3.0 of ConcurrentQueue, the data with an alignment of 128 was stored inside of a heap allocation. Since heap allocations have an alignment of the platform word size (in the case of 64-bit architectures, 8), there was no padding between the Header and the value here. It was only when that heap allocation was removed and the alignment was increased that this problem appeared.

Say, who wrote this code? I’ll need to find them and give them a piece of my mind. git blame can tell us who, exactly, is responsible for this mess.

Egads! It was me all along!

This bug is no longer in portable-atomic-util. I filed a PR which has now been merged, and the versions of portable-atomic-util with that bug have been yanked from crates.io.

To come full-circle, let’s see if our tests work with the newest version of portable-atomic-util.

$ wasm-pack test --node --no-default-features --features portable-atomic

<snip: tests succeed>

Awesome!

Parting Shots

So, what’s my conclusion? What did we learn from this little escapade?

I’d like to bring your attention to the source of the original soundness bug.

#[must_use]

pub fn as_ptr(&self) -> *const T {

// Get the raw pointer.

let ptr = self.shared.as_ptr() as *mut u8;

// Add the size of the header so that it points to the value.

let new_ptr = strict::map_addr(ptr, |addr| addr + mem::size_of::<Header>());

// Cast the pointer to the correct type.

strict::with_metadata_of(new_ptr, self.shared.as_ptr() as *mut T)

}

Eagle-eyed readers might have noticed that this code has no unsafe. Not even a line. Sure, it does some risky pointer math, but that’s considered safe in Rust.

Also, keep in mind that concurrent-queue had no bugs introduced either. It just changed the alignment of a structure from 8 to 128. That can be done in safe Rust; hell, it isn’t even a minor change according to SemVer.

Here is the actual unsafe code that caused this unsoundness.

#[inline]

fn try_inner(&self) -> Option<&Inner<T>> {

let inner = self.inner.load(Ordering::Acquire);

unsafe { inner.as_ref() }

}

It’s not unsound; it’s not even incorrect. This code was checked, audited and did everything that it was supposed to do. It was a combination of two pieces of 100% safe code from another crate that caused this bug to happen.

When I begun this article, I talked about how you need to check your unsafe code. What I wanted to prove is that you can’t just check your unsafe code. You need to check each and every line of safe code too. Safety is non-local, and a bug in safe code can easily cause unsound behavior in your unsafe code if you’re not careful.

For me, I’m going to be extra careful when I write new unsafe logic. I advise that you do the same.